Every technical choice impacts the end experience. When I started building Playboards, my goal was to create seamless, real-time gaming sessions where the technology fades into the background and people can just focus on playing together—bringing traditional board games online without losing their warmth and immediacy.

I had always wanted to learn Elixir and Phoenix, and building Playboards became my way of discovering how these technologies could serve that vision. This post dives deep into the ‘how’ (Phoenix LiveView, SQLite, and the BEAM’s Actor Model) but also the ‘why’ behind each architectural decision.

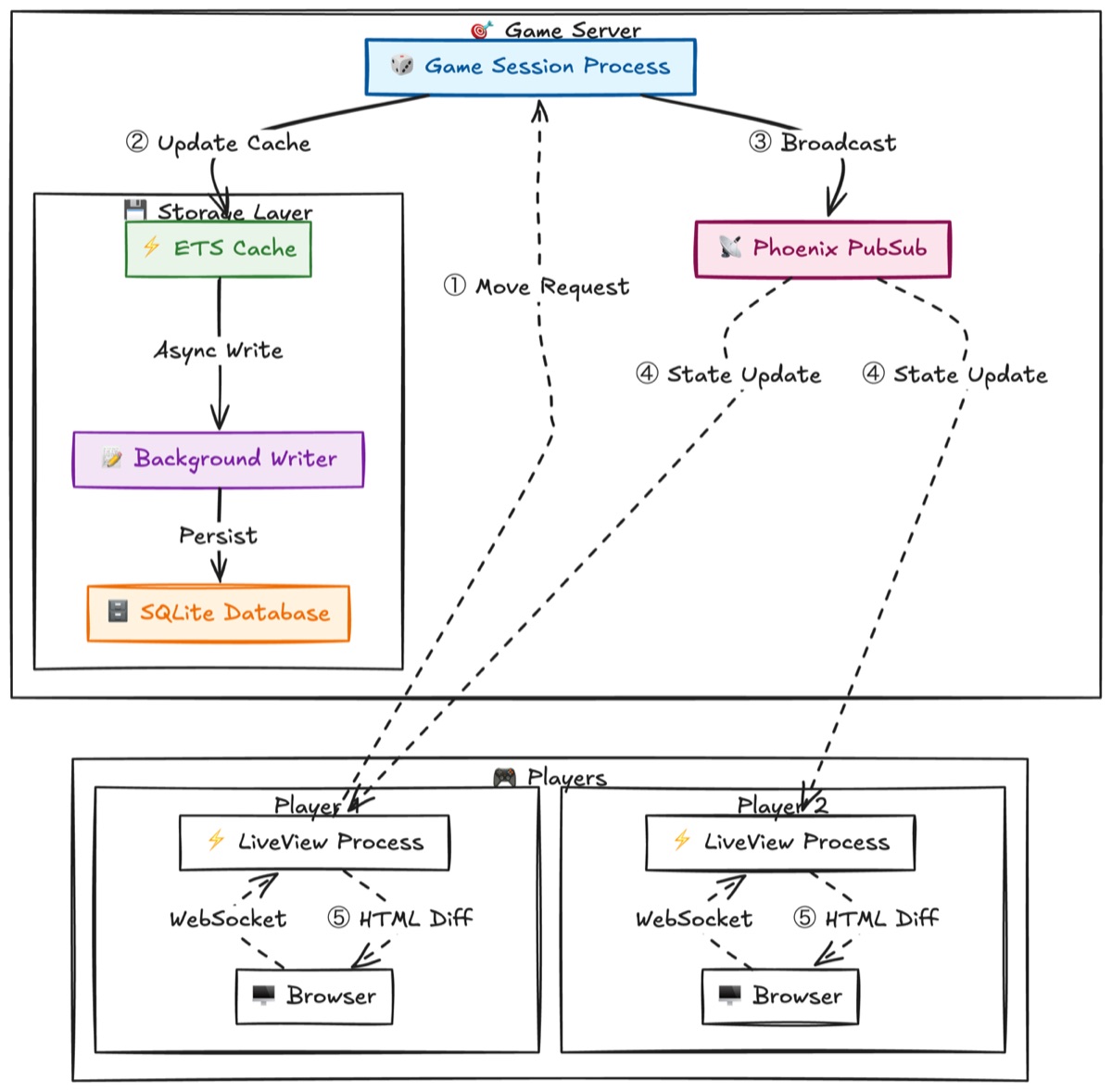

The Architecture Overview

LiveView model. Made with Excalidraw.

1. Phoenix LiveView: Single Platform for Complex Problems

Why I Avoided the Multi-Technology Stack

Single Developer Stack: My primary motivation was avoiding complexity. As a solo developer, I wanted a stack I could fully understand without needing expertise across multiple domains. The conventional JavaScript approach typically pulls in Redis, Pusher or Socket.IO, state management libraries, and the orchestration to tie them together. Elixir and Phoenix remove most of these decisions: Phoenix ships WebSocket handling and pub/sub messaging, while process-based state and fault tolerance are built into the BEAM and OTP. For a single developer, that leaves far fewer architectural decisions to make.

Server-Side State Benefits: LiveView eliminates the “dual state” problem of SPAs. The server is the single source of truth: it renders the HTML and keeps the browser synchronized over a WebSocket. Every move flows through server validation, and only HTML diffs are transmitted. There is no need for Redux, state synchronization libraries, or optimistic updates.

2. Actor Model Concurrency: BEAM’s Secret Weapon

Process Isolation for Game Sessions

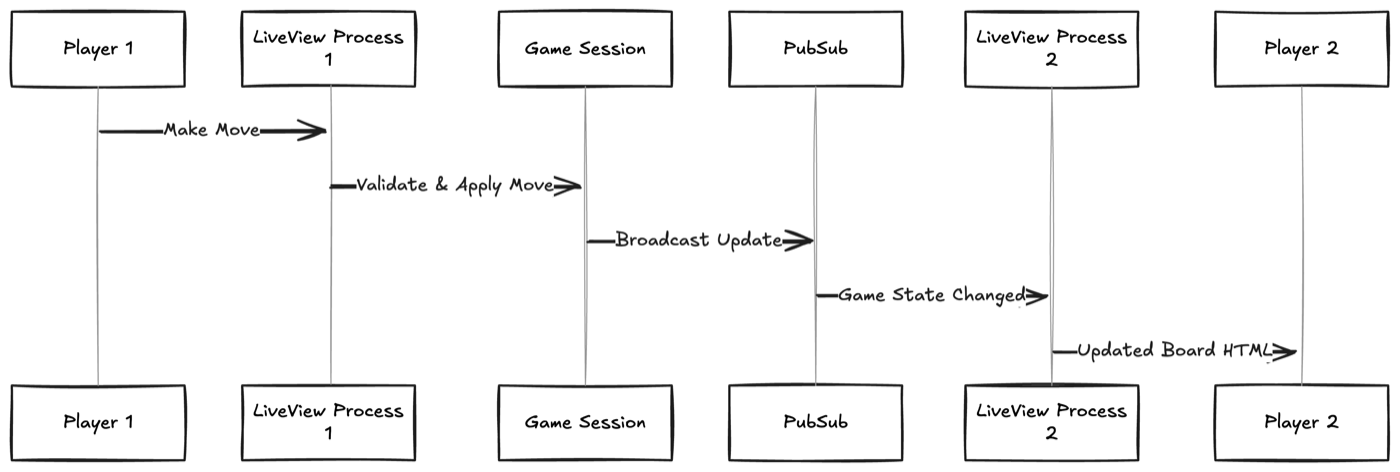

Each game runs in its own isolated process, communicating via message passing:

Move sequence. Made with Excalidraw.

Fault Tolerance: If one game crashes, others continue unaffected. LiveView processes automatically clean up when players disconnect.
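To make the isolation claim concrete, here is a minimal sketch that uses only the Elixir standard library. The module name `IsolationSketch`, the use of an `Agent` as a stand-in for a game process, and the `:temporary` restart setting are all assumptions for illustration, not the actual Playboards code.

```elixir
defmodule IsolationSketch do
  # Starts a supervisor that owns one child process per game.
  def start_supervisor do
    DynamicSupervisor.start_link(strategy: :one_for_one)
  end

  # Each game is an Agent holding its own state (hypothetical shape).
  # restart: :temporary means a crashed game is simply dropped, not restarted.
  def start_game(supervisor, game_id) do
    spec = %{
      id: game_id,
      start: {Agent, :start_link, [fn -> %{id: game_id, moves: []} end]},
      restart: :temporary
    }

    DynamicSupervisor.start_child(supervisor, spec)
  end
end
```

Killing one game process with `Process.exit(pid, :kill)` leaves the supervisor and every sibling game running, because the processes share no memory and the supervisor handles the exit on its own.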

Race Condition Prevention: The Actor Model serializes moves. The game session process takes messages from its mailbox one at a time, so two moves can never be applied simultaneously.
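The serialization can be sketched with a plain `GenServer`. The module name `GameSessionSketch`, the `claim/3` function, and the map-based board are hypothetical; the real session process presumably holds richer state.

```elixir
defmodule GameSessionSketch do
  use GenServer

  def start_link(_opts \\ []), do: GenServer.start_link(__MODULE__, %{})

  # Blocks the caller until the session process has handled the move.
  def claim(session, square, player) do
    GenServer.call(session, {:claim, square, player})
  end

  @impl true
  def init(board), do: {:ok, board}

  # Messages are handled one at a time, so this check-then-write
  # cannot interleave with another player's move.
  @impl true
  def handle_call({:claim, square, player}, _from, board) do
    case board do
      %{^square => owner} -> {:reply, {:error, {:taken_by, owner}}, board}
      _ -> {:reply, :ok, Map.put(board, square, player)}
    end
  end
end
```

If twenty callers try to claim the same square at once, exactly one receives `:ok` and the rest receive an error tuple, without any explicit locking in the application code.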

Graceful Player Disconnection

When browsers close, LiveView processes terminate and trigger automatic cleanup. No manual session management needed.
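One common way to get this kind of cleanup on the BEAM is process monitoring. The sketch below assumes a session process that monitors each player's process (in a LiveView app, that would be the LiveView pid) and removes the player when it goes down. `PresenceSketch` and its functions are illustrative names, and whether Playboards uses monitors or another mechanism is not stated here.

```elixir
defmodule PresenceSketch do
  use GenServer

  def start_link(_opts \\ []), do: GenServer.start_link(__MODULE__, :ok)
  def join(session, player_pid), do: GenServer.call(session, {:join, player_pid})
  def players(session), do: GenServer.call(session, :players)

  @impl true
  def init(:ok), do: {:ok, MapSet.new()}

  @impl true
  def handle_call({:join, pid}, _from, players) do
    # Ask the runtime for a :DOWN message when this player process exits.
    Process.monitor(pid)
    {:reply, :ok, MapSet.put(players, pid)}
  end

  def handle_call(:players, _from, players) do
    {:reply, MapSet.to_list(players), players}
  end

  # Delivered by the runtime when a monitored process exits for any reason.
  @impl true
  def handle_info({:DOWN, _ref, :process, pid, _reason}, players) do
    {:noreply, MapSet.delete(players, pid)}
  end
end
```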

3. WebRTC + Phoenix: Bridging P2P and Server-Centric Models

Voice chat needs P2P connections for low latency, but game state requires centralized authority. My solution: Phoenix handles WebRTC signaling while maintaining centralized game state. JavaScript manages WebRTC through event queuing to prevent race conditions. Game state flows through Phoenix PubSub, voice through WebRTC, coordinated via LiveView.
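The server side of signaling is essentially a relay: it forwards offers, answers, and ICE candidates between peers without interpreting them. The sketch below shows that idea using the standard library's `Registry` as a stand-in for Phoenix PubSub so that it runs without dependencies. That substitution, the `SignalingSketch` module, and the message shape are assumptions made for illustration.

```elixir
defmodule SignalingSketch do
  @registry SignalingSketch.Registry

  def start_link do
    # :duplicate keys let many peers register under the same room id.
    Registry.start_link(keys: :duplicate, name: @registry)
  end

  # Called from the process that represents a peer (for example its LiveView).
  def join(room_id) do
    {:ok, _owner} = Registry.register(@registry, room_id, nil)
    :ok
  end

  # Forwards an opaque signaling payload to every other peer in the room.
  def relay(room_id, kind, payload) when kind in [:offer, :answer, :ice_candidate] do
    sender = self()

    Registry.dispatch(@registry, room_id, fn entries ->
      for {pid, _value} <- entries, pid != sender do
        send(pid, {:signal, kind, sender, payload})
      end
    end)
  end
end
```

The payload never touches game state, which keeps the two channels separate: the server stays authoritative for moves while audio flows directly between browsers.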

4. ETS: The Sub-Millisecond Database

Game state is read constantly. A networked database typically adds 1-5 ms of latency per query. ETS responds in ~0.1 ms because the data lives in BEAM memory.

Hybrid Strategy: Hot data lives in ETS, and a background GenServer batches writes to SQLite for durability. A 2-hour TTL evicts stale sessions so memory use stays bounded. ETS also lets many processes read the same table concurrently.
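A minimal sketch of the ETS side of that strategy might look like the following. The module name `HotCacheSketch`, the `{game_id, state, expires_at}` row layout, and the explicit `now` parameter (added to make expiry easy to test) are assumptions; the batched SQLite writes are left out.

```elixir
defmodule HotCacheSketch do
  @ttl_ms :timer.hours(2)

  # Creates a public table tuned for concurrent readers. The calling process
  # owns the table, so in a real app a long-lived process should create it.
  def new do
    :ets.new(:game_cache, [:set, :public, read_concurrency: true])
  end

  def put(table, game_id, state, now \\ now_ms()) do
    :ets.insert(table, {game_id, state, now + @ttl_ms})
    :ok
  end

  # Expired rows are treated as misses even before the sweeper removes them.
  def get(table, game_id, now \\ now_ms()) do
    case :ets.lookup(table, game_id) do
      [{^game_id, state, expires_at}] when expires_at > now -> {:ok, state}
      _ -> :miss
    end
  end

  # Deletes every expired row and returns how many were removed.
  def sweep(table, now \\ now_ms()) do
    :ets.select_delete(table, [{{:_, :_, :"$1"}, [{:"=<", :"$1", now}], [true]}])
  end

  defp now_ms, do: System.monotonic_time(:millisecond)
end
```

A periodic `sweep/2` call from a GenServer (for example via `Process.send_after/3`) would enforce the TTL in the background.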

5. SQLite + Fly.io: The Contrarian Database Choice

Co-locating the database with the application eliminates network hops. SQLite runs inside the same OS process as the Phoenix application, so a query is a library call rather than a network round trip.

Cost Impact: A traditional stack would cost about $70/month; my stack costs $5/month.

“Database as Library”: SQLite is an embedded library, not a separate service to run and monitor.

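Why This Works: Game sessions expire after 2 hours, which keeps the data set manageable. The read-heavy workload suits SQLite. WAL mode removes the biggest concurrency bottleneck: readers don’t block the writer and the writer doesn’t block readers, although SQLite still allows only one writer at a time. Geographic distribution comes from Fly.io’s regional deployments.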

6. Real-Time Synchronization Without Tears

Server-side validation with turn-based serialization prevents race conditions. The system validates game state and current player before processing moves.
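The validation step can be expressed as a pure function that the session process calls before changing state. The sketch below uses a hypothetical two-player game map with `:status`, `:players`, `:turn`, and `:board` keys; the actual rules and data shapes in Playboards will differ per game.

```elixir
defmodule MoveValidationSketch do
  # Returns {:ok, new_game} or {:error, reason}. The first failing check
  # short-circuits the `with` chain and is returned to the caller.
  def apply_move(game, player, square) do
    with :ok <- check_status(game),
         :ok <- check_turn(game, player),
         :ok <- check_square(game, square) do
      {:ok, %{game | board: Map.put(game.board, square, player), turn: other(game, player)}}
    end
  end

  defp check_status(%{status: :in_progress}), do: :ok
  defp check_status(_game), do: {:error, :game_not_active}

  # Matches only when the moving player is the one whose turn it is.
  defp check_turn(%{turn: player}, player), do: :ok
  defp check_turn(_game, _player), do: {:error, :not_your_turn}

  defp check_square(%{board: board}, square) do
    if Map.has_key?(board, square), do: {:error, :square_taken}, else: :ok
  end

  defp other(%{players: {a, b}}, player), do: if(player == a, do: b, else: a)
end
```

Keeping the rules in a pure function means they can be unit tested without starting any processes, while the session process remains the only place where the result is committed.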

LiveView reconnects automatically after a network drop, and because game state lives in ETS and SQLite rather than in the browser, a returning player rejoins the session where they left off.

7. Scaling Insights: From Prototype to Production

Current Limits: ETS tables are local to a single node, and WebRTC signaling currently needs both peers to be coordinated on the same node.

Scaling Solutions: :global or Horde can register sessions across nodes, Phoenix.PubSub already supports multi-node distribution, and voice could move to regional servers.
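As a rough illustration of the `:global` option, the sketch below registers a session under a cluster-wide name so that any node could reach it by game id. `GlobalSessionSketch`, the `{:game_session, id}` key, and the `Agent`-based state are assumptions for the example; it also runs on a single, non-distributed node.

```elixir
defmodule GlobalSessionSketch do
  # Starts a session registered under a cluster-wide name.
  def start(game_id) do
    Agent.start_link(fn -> %{id: game_id, moves: []} end, name: via(game_id))
  end

  # Callers address the session by game id, not by pid or node.
  def record_move(game_id, move) do
    Agent.update(via(game_id), fn state -> %{state | moves: [move | state.moves]} end)
  end

  def moves(game_id) do
    Agent.get(via(game_id), fn state -> Enum.reverse(state.moves) end)
  end

  # Returns the pid, or :undefined when no session is registered.
  def whereis(game_id), do: :global.whereis_name({:game_session, game_id})

  defp via(game_id), do: {:global, {:game_session, game_id}}
end
```

Starting a second session with the same id returns `{:error, {:already_started, pid}}`, which gives a simple guarantee of one session process per game across the cluster.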

Horizontal Scaling Strategy

Clean separation between GameSessions and Storage makes sharding straightforward.
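One simple way to shard sessions is to hash the session id onto a fixed list of nodes. This is only a sketch of the idea: `ShardSketch`, its function names, and the modulo-style placement are assumptions, not a description of how Playboards is deployed.

```elixir
defmodule ShardSketch do
  # Maps a session id to one of `shard_count` shards, numbered from 0.
  # :erlang.phash2/2 returns an integer in the range 0..shard_count-1.
  def shard_for(session_id, shard_count) when is_integer(shard_count) and shard_count > 0 do
    :erlang.phash2(session_id, shard_count)
  end

  # Picks the node for a session from a fixed, ordered list of nodes.
  def node_for(session_id, nodes) when nodes != [] do
    Enum.at(nodes, shard_for(session_id, length(nodes)))
  end
end
```

Because placement depends on the number of nodes, adding or removing a node remaps many sessions. With sessions that expire after 2 hours that may be acceptable; otherwise a consistent hashing scheme would reduce the churn.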

8. Development Surprises & Lessons

Unexpected Wins: DOM-based testing simulates moves elegantly. Hot code reloading updates active games instantly. Process isolation prevents cascading crashes.

If Starting Over: Earlier WebRTC integration. More ETS caching for profiles/history. Event sourcing for replay features.

The Broader Philosophy: Reducing Complexity

The key insight from building Playboards is that complexity reduction often trumps cutting-edge technology. By choosing Phoenix LiveView over SPAs, I eliminated entire categories of problems: state synchronization, client-server coordination, and complex frontend state management. Not having to manage complex infrastructure made working with this stack genuinely enjoyable.

Core Principles: Simplicity over sophistication. Single platform over microservices. Process isolation for fault tolerance. Authoritative server state. Hybrid storage (memory + disk).

Conclusion

This architecture delivered on the original vision: a simple, affordable platform for people to connect over traditional board games. By choosing a simpler stack—Phoenix LiveView over SPAs, SQLite over distributed databases, Actor Model processes over microservices—I could spend less time on infrastructure and more time thinking about what makes a game night enjoyable and what games to add. That’s the real win.

The key takeaway: not every real-time app needs distributed databases or client-side state management. For gaming workloads with natural boundaries, mature technology used cleverly delivers better performance, lower costs, and less complexity—which translates to a more reliable and enjoyable experience for the people who use it.

Want to see this architecture in action? Check out the Playboards project page or explore the source code.

Tags: #elixir #phoenix #liveview #webrtc #sqlite #gaming #architecture #realtime